After coming under increasing pressure and criticism over the number of disturbing videos published on its platform, YouTube has begun releasing quarterly enforcement reports. YouTube intends these reports to show the progress it is making in removing content that violates its policies.

For some time now, YouTube has struggled to deal with disturbing and distressing videos, aimed primarily at children, turning up on the site. Despite YouTube's crackdown, this type of content keeps appearing: Wired was still able to find examples as recently as March.

The first quarterly enforcement report, covering October to December 2017, has just been published. According to the report, YouTube removed 8.3 million videos during that period. Of those, 6.7 million were first flagged for review by machines rather than humans, and 76 percent of those machine-flagged videos were removed before they received a single view.

Although the AI behind this automatic flagging system has been criticized because of the videos that slip past it, YouTube defended the system in the report, saying that it lets the platform enforce its policies more quickly. YouTube also highlighted the volume of human flags that supplement the automated system:

“In addition to our automated flagging systems, Trusted Flaggers and our broader community of users play an important role in flagging content. A single video may be flagged multiple times and may be flagged for different reasons. YouTube received human flags on 9.3 million unique videos in Q4 2017.”

Trusted Flaggers are individuals from government agencies and NGOs who have attended "…a YouTube training to learn about our Community Guidelines and enforcement processes." Almost 95 percent of human flags, however, came from ordinary YouTube users.

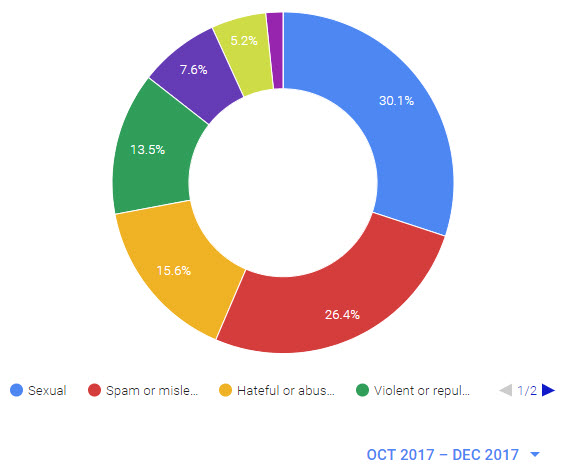

The reasons given for flagging videos varied, and included content that was sexual, spam or misleading, hateful or abusive, or violent or repulsive. The videos that set off this crisis for YouTube fell into all of those categories. Other reasons available to human flaggers include harmful or dangerous acts, child abuse, and promotion of terrorism.

Not every video flagged by a human was removed from the platform. According to the report, a flagged video is reviewed against the Community Guidelines, and only videos that violate those guidelines are removed.

This first quarterly enforcement report shows that YouTube is taking action to prevent harmful content from being published and promoted on the platform. As long as disturbing videos can still be found there, however, YouTube will remain under pressure. If it is committed to giving people a platform to share videos freely with the world, it needs a long-term solution to this problem. YouTube's work is only just beginning.