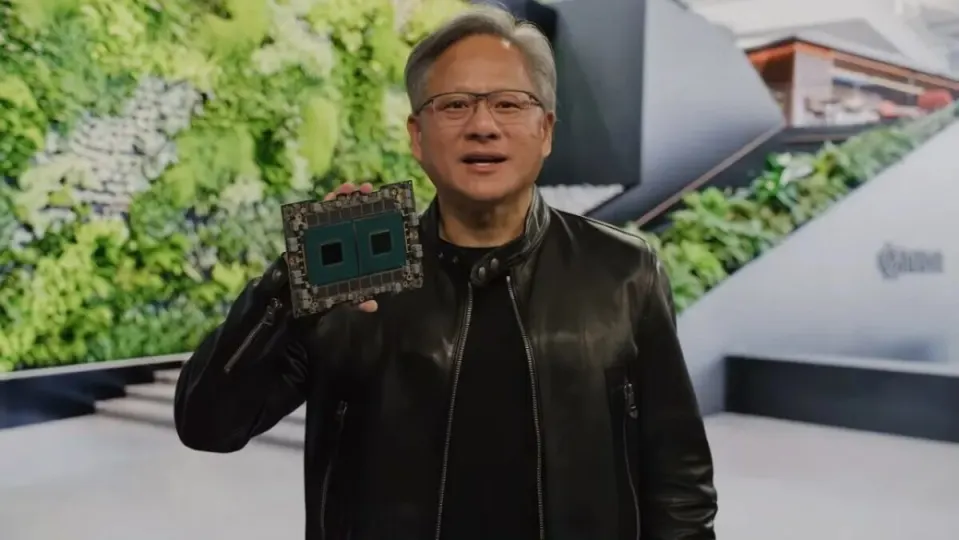

Nvidia has unveiled its latest artificial intelligence (AI) chip, the Blackwell B200, which it claims can perform certain tasks 30 times faster than its predecessor. The company expects the chip to reshape the AI sector in the coming years.

The American chip company made the announcement yesterday, presenting a server card that it says will revolutionize the field of AI thanks to its enormous capabilities.

Nvidia's must-have H100 AI chip made it a multitrillion-dollar company. Now Nvidia is about to extend its lead with the new Blackwell B200 GPU and the GB200 “superchip”.

How they managed to design the new Blackwell B200 GPU

Nvidia claims that the new B200 GPU offers up to 20 petaflops of FP4 compute thanks to its 208 billion transistors. It also claims that a GB200, which combines two of these GPUs with a single Grace CPU, can deliver 30 times the performance for LLM inference workloads while being substantially more efficient.

Nvidia says the GB200 “reduces cost and energy consumption by up to 25 times” compared to an H100. As an example, the company says that training a model with 1.8 trillion parameters previously required 8,000 Hopper GPUs and 15 megawatts of power. Nvidia's CEO claims that 2,000 Blackwell GPUs can now do the same job while consuming only four megawatts.
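
To put the training figures above in proportion, here is a minimal Python sketch that works out the ratios. It uses only the numbers Nvidia quoted; the helper names are illustrative, and it assumes both clusters train the model in a comparable amount of time, which the figures do not state.

```python
# Cluster figures as quoted in the article (Nvidia's claims, not measurements).
hopper = {"gpus": 8_000, "megawatts": 15.0}
blackwell = {"gpus": 2_000, "megawatts": 4.0}


def reduction_factor(before: float, after: float) -> float:
    """How many times smaller `after` is than `before`."""
    return before / after


def kilowatts_per_gpu(cluster: dict) -> float:
    """Average cluster power per GPU in kilowatts (whole-cluster draw divided by GPU count)."""
    return cluster["megawatts"] * 1_000 / cluster["gpus"]


gpu_reduction = reduction_factor(hopper["gpus"], blackwell["gpus"])
power_reduction = reduction_factor(hopper["megawatts"], blackwell["megawatts"])

print(f"GPUs needed: {gpu_reduction:.2f}x fewer")
print(f"Power drawn: {power_reduction:.2f}x less")
print(f"Hopper:    {kilowatts_per_gpu(hopper):.3f} kW per GPU")
print(f"Blackwell: {kilowatts_per_gpu(blackwell):.3f} kW per GPU")
```

On these numbers the GPU count drops by a factor of 4 and the power by a factor of 3.75, while the average draw per GPU stays roughly the same. The saving in this example therefore comes from needing fewer GPUs, and the “up to 25 times” figure is not explained by this training example alone.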

In a GPT-3 LLM benchmark with 175 billion parameters, Nvidia says the GB200 shows a somewhat more modest gain: seven times the performance of an H100 and four times the training speed.

Nvidia explained to journalists that one of the key improvements is a second-generation transformer engine that doubles the computation, bandwidth, and model size by using four bits for each neuron instead of eight (hence the 20 petaflops of FP4 I mentioned earlier).
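
The effect of halving the bit width can be illustrated with a rough Python sketch of how much memory a model's weights occupy. It assumes each parameter is stored as a single value of the given width and borrows the 175-billion-parameter GPT-3 size purely as an example; it ignores activations, caches, and any scaling metadata a real low-precision format needs.

```python
def weight_gigabytes(num_params: int, bits_per_param: int) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


GPT3_PARAMS = 175_000_000_000  # illustrative model size

fp8_gb = weight_gigabytes(GPT3_PARAMS, 8)
fp4_gb = weight_gigabytes(GPT3_PARAMS, 4)

print(f"8-bit weights: {fp8_gb:.1f} GB")
print(f"4-bit weights: {fp4_gb:.1f} GB")
print(f"Same memory holds {fp8_gb / fp4_gb:.0f}x the parameters at 4 bits")
```

Under these assumptions the same memory and bandwidth carry twice as many parameters at four bits, which is the sense in which the model size doubles.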

A second key difference only comes into play when large numbers of these GPUs are linked together: a next-generation NVLink switch that lets 576 GPUs communicate with each other, with 1.8 terabytes per second of bidirectional bandwidth.

To achieve this, Nvidia had to build an entirely new network switch chip, with 50 billion transistors and some compute of its own on board: 3.6 teraflops of FP8, Nvidia says.

According to Nvidia, a cluster of just 16 GPUs previously spent 60 percent of its time on communication and only 40 percent on actual computation.
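
To see why that communication share matters, the following Python sketch applies Amdahl's law to the 60/40 split. It is a simplified model that assumes communication and computation never overlap; the function name and the example speedup values are illustrative and are not Nvidia figures.

```python
def overall_speedup(comm_fraction: float, comm_speedup: float) -> float:
    """Amdahl's law: speed up only the communication share of the runtime."""
    compute_fraction = 1.0 - comm_fraction
    return 1.0 / (compute_fraction + comm_fraction / comm_speedup)


COMM_FRACTION = 0.6  # share of time spent communicating, as quoted by Nvidia

# Hypothetical interconnect improvements, chosen only for illustration.
for factor in (2, 4, float("inf")):
    gain = overall_speedup(COMM_FRACTION, factor)
    print(f"communication {factor}x faster -> cluster {gain:.2f}x faster")
```

In this model, even an infinitely fast interconnect caps the gain at 2.5 times, because the remaining 40 percent of the time is still spent on computation.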

Nvidia expects companies to buy large quantities of these GPUs, of course, and is packaging them in larger designs, such as the GB200 NVL72, which connects 36 CPUs and 72 GPUs in a single liquid-cooled chassis for a total of 720 petaflops of AI training performance or 1,440 petaflops of inference. It has nearly three kilometers of cables inside, with 5,000 individual cables.
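
The rack-level figures can be checked against the per-GPU numbers quoted earlier with a short Python sketch. The constant names are illustrative; the values are the ones given in the article.

```python
# Figures quoted in the article for the GB200 NVL72 rack and the B200 GPU.
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
RACK_INFERENCE_PFLOPS = 1_440
RACK_TRAINING_PFLOPS = 720
B200_FP4_PFLOPS = 20

inference_per_gpu = RACK_INFERENCE_PFLOPS / GPUS_PER_RACK
training_per_gpu = RACK_TRAINING_PFLOPS / GPUS_PER_RACK
gpus_per_cpu = GPUS_PER_RACK / CPUS_PER_RACK

print(f"Inference per GPU: {inference_per_gpu:.0f} petaflops")
print(f"Training per GPU:  {training_per_gpu:.0f} petaflops")
print(f"GPUs per CPU:      {gpus_per_cpu:.0f}")
print("Matches B200 FP4 figure:", inference_per_gpu == B200_FP4_PFLOPS)
```

The inference total works out to 20 petaflops per GPU, matching the B200's FP4 figure, and the two-to-one GPU-to-CPU ratio matches the GB200 layout of two GPUs per Grace CPU. The training total is half the inference total per GPU, which would be consistent with training at eight bits rather than four, though the article does not say so.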

We haven’t heard anything about new gaming GPUs, as this news comes from Nvidia’s GPU Technology Conference, which usually focuses almost entirely on GPU computing and AI. But the Blackwell GPU architecture will likely also power a future line of desktop graphics cards in the RTX 50 series.