Researchers warn about the use of generative AI to evade malware detection

OpenAI itself has blocked more than 20 malicious networks that were seeking to exploit its platform

- December 25, 2024

- Updated: July 1, 2025 at 10:35 PM

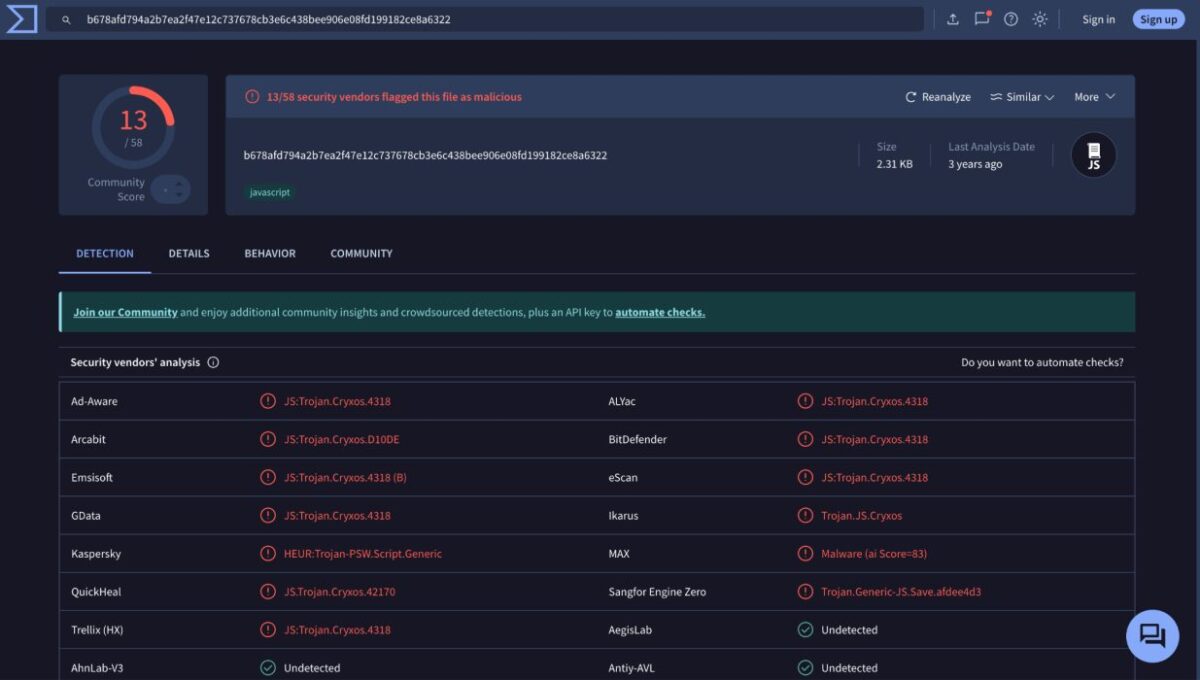

A recent analysis by Palo Alto Networks' Unit 42 has revealed that language models like ChatGPT can be used to modify JavaScript malware so that it is harder to detect. Although these models do not generate malware from scratch, cybercriminals can ask them to rewrite or obfuscate existing malicious code. “These transformations are more natural, which complicates the identification of malware,” the researchers state. Applied at scale, this approach could degrade malware classification systems by leading them to label malicious code as harmless.

Despite the security restrictions implemented by providers like OpenAI, tools like WormGPT are being used to create more convincing phishing emails and new types of malware. In October 2024, OpenAI blocked more than 20 malicious networks seeking to exploit its platform. In tests, Unit 42 generated 10,000 variants of malicious JavaScript that kept their original functionality but received lower detection scores from models such as Innocent Until Proven Guilty. The techniques used include renaming variables, inserting junk code, and rewriting entire scripts.
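To make the idea concrete, here is a minimal sketch of why renaming variables and inserting junk code can change how a script looks without changing what it does. It is written in Python rather than JavaScript, it uses a deliberately harmless price calculation, and it is not Unit 42's method or tooling. All names in it (`total_price`, `f1`, `identifier_set`, `jaccard`) are hypothetical and chosen only for illustration.

```python
import io
import keyword
import tokenize

# A harmless, readable function (hypothetical example).
ORIGINAL_SRC = '''
def total_price(prices, tax_rate):
    subtotal = sum(prices)
    return round(subtotal * (1 + tax_rate), 2)
'''

# The same logic after renaming identifiers and inserting dead code.
REWRITTEN_SRC = '''
def f1(a0, b7):
    q = 0
    for _ in ():
        q += 1
    z = sum(a0)
    return round(z * (1 + b7), 2)
'''


def load_function(source, name):
    """Execute the source text and return the function called `name`."""
    namespace = {}
    exec(source, namespace)
    return namespace[name]


def identifier_set(source):
    """Collect the non-keyword names that appear in the source text."""
    names = set()
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            names.add(tok.string)
    return names


def jaccard(a, b):
    """Share of names the two sets have in common (1.0 means identical)."""
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    original = load_function(ORIGINAL_SRC, "total_price")
    rewritten = load_function(REWRITTEN_SRC, "f1")
    # Same inputs give the same result: the behavior is unchanged.
    print(original([10.0, 5.5], 0.2) == rewritten([10.0, 5.5], 0.2))
    # Surface-level overlap between the two versions is small.
    print(jaccard(identifier_set(ORIGINAL_SRC), identifier_set(REWRITTEN_SRC)))
```

In this toy case the two versions share only the built-in names `sum` and `round`, yet they return the same value for the same input. A detector that leans on surface features such as identifier names would see two very different scripts, which is the weakness the researchers describe.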

According to Unit 42, machine learning classifiers can be tricked into labeling these variants as benign in 88% of cases. Even widely used tools such as VirusTotal struggle to detect the rewritten code. The researchers warn that these AI-based rewrites are harder to track than the output of libraries like obfuscator.io. However, they suggest that the same techniques could improve detection models by generating more robust training data.

On another front, researchers from North Carolina State University discovered an attack called TPUXtract, which makes it possible to steal AI models running on Google's Edge TPUs by capturing electromagnetic signals. Although the technique is notable for its precision, it requires physical access to the device and specialized equipment, which limits its scope.

Morphisec has also demonstrated that the Exploit Prediction Scoring System (EPSS), used to assess vulnerabilities, can be manipulated with fake social media posts and empty GitHub repositories. According to Ido Ikar, this technique allows “inflating indicators” and misleads organizations by altering their cyber risk management priorities.

Pedro Domínguez

Publicist and audiovisual producer in love with social networks. I spend more time thinking about which videogames I will play than playing them.
