News

The robots are already here, just as Isaac Asimov imagined

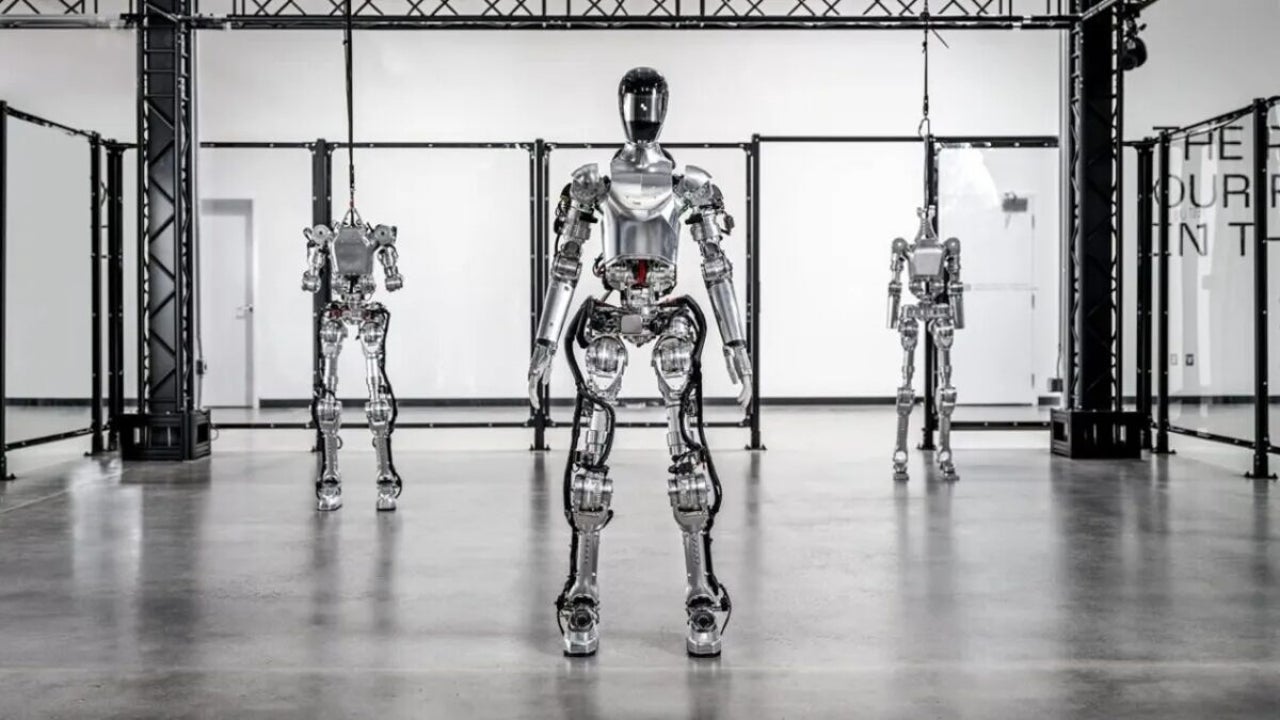

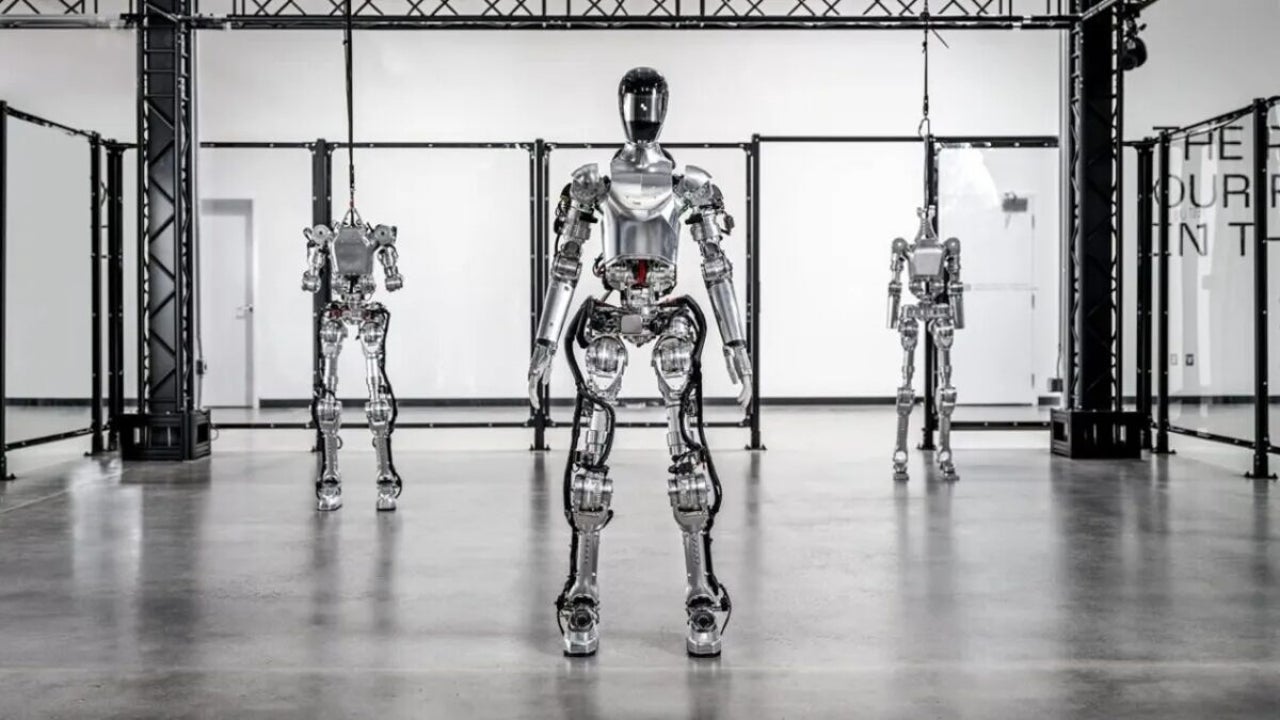

Are robots really functional already? That's what Figure AI and OpenAI are selling us.

- March 14, 2024

- Updated: July 1, 2025 at 11:56 PM

For decades, progress on humanoid robots was so slow that a working one seemed impossible. Now it is accelerating rapidly thanks to the collaboration between Figure AI and OpenAI, and the result is the most impressive humanoid robot video we have ever seen.

On Wednesday, robotics company Figure AI released an update video of its robot Figure 01 running a new vision-language model (VLM). The model has transformed the robot from a puppet into a science fiction machine that approaches the capabilities of C-3PO. Tesla’s Optimus robots have a serious rival.

A video that could go down in history

In the video, Figure 01 stands behind a table holding a plate, an apple, and a cup. On the left, there is a strainer. A person stands in front of the robot and asks: “Figure 01, what do you see right now?”

After a few seconds, Figure 01 responds in a voice that sounds remarkably human, describing everything on the table as well as the man standing in front of it.

Then the man asks: “Hey, can I eat something?” Figure 01 responds “Sure” and, with a smooth and skillful movement, picks up the apple and hands it to the man.

Next, the man empties some crumpled papers from a bucket in front of Figure 01 while asking: “Can you explain why you did what you just did while picking up this trash?”

Figure 01 wastes no time explaining its reasoning as it puts the paper back in the bin. “I gave you the apple because it’s the only edible thing I could give you from the table.”

The company explains in a statement that Figure 01 performs “voice to voice” reasoning: it uses a pretrained multimodal model from OpenAI, a VLM, to understand images and text, and relies on the complete voice conversation to generate its responses.

This is different from, for example, OpenAI’s GPT-4, which focuses on written prompts.

It also uses what the company calls “low-level learned bimanual manipulation”. The system combines precise image calibration (down to the pixel level) with its neural network to control movement.

The company claims that all the behaviors in the video are learned rather than teleoperated, which means that no one is controlling Figure 01 from behind the scenes.

But without seeing Figure 01 in person and asking the questions ourselves, it is difficult to verify these claims. It is possible that this was not the first time Figure 01 had performed this routine. It could have been the hundredth, which would explain its speed and fluency.

Journalist specialized in technology, entertainment and video games. Writing about what I'm passionate about (gadgets, games and movies) allows me to stay sane and wake up with a smile on my face when the alarm clock goes off. PS: this is not true 100% of the time.

Latest from Chema Carvajal Sarabia
