Amazon is introducing Model Evaluation on Bedrock, a tool meant to change how developers evaluate AI models. Announced at the recent AWS re:Invent conference, it targets a common problem: choosing the right model for a specific project. Without a reliable way to compare options, developers can end up with a model that falls short of their accuracy requirements or is larger than the job needs.

Inside Amazon’s Bedrock Model Evaluation Revolution

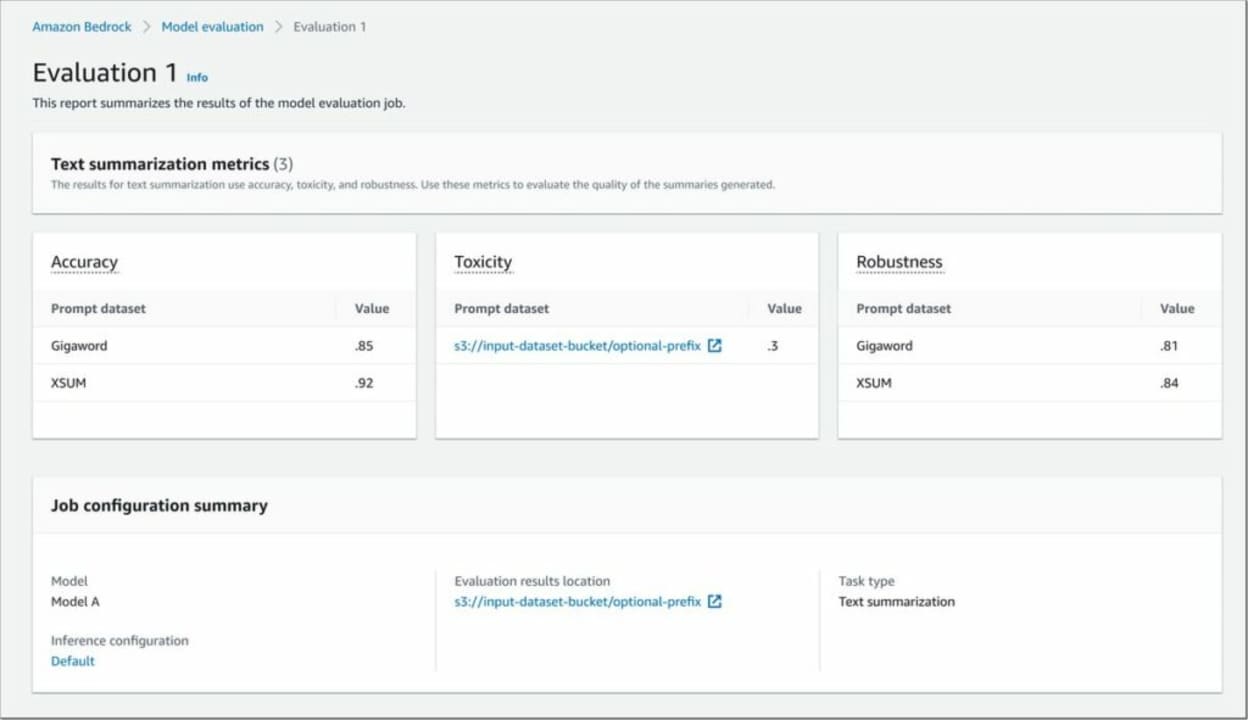

The tool has two components: automated evaluation and human evaluation. With automated evaluation, developers can score a model on metrics such as robustness and accuracy across tasks including summarization, text classification, question answering, and text generation. Because Bedrock also hosts popular third-party AI models, developers have a wide range of candidates to compare.

AWS provides standard test datasets, but developers can also bring their own data into the benchmarking platform, which gives a more realistic picture of how a model will perform on their actual workload. The system then produces a detailed report that highlights the model's strengths and weaknesses.

Human benchmarking

For human evaluation, users can work with a team provided by AWS or use their own evaluators, specifying the task type, evaluation metrics, and datasets to use. Human reviewers can judge qualities that automated metrics may miss, such as empathy or friendliness.

Benchmarking is optional: Amazon does not require customers to evaluate models before using them. Developers who already know Bedrock's foundation models, or who have a clear sense of what they need, can skip the step.

During the preview, AWS charges only for the model inference consumed during an evaluation, which keeps the cost of benchmarking low. Amazon presents the service as part of its push for responsible and effective AI, giving companies a way to measure how a given model will affect their projects.

In short, Model Evaluation on Bedrock tackles the recurring problem of picking the right AI model by combining automated and human-driven evaluations, giving developers evidence rather than guesswork when choosing a model in a fast-moving field.