
GPT-4: The Most Advanced AI Yet, with Human-Like Capabilities!

The new artificial intelligence model achieves incredible results in tests designed for humans.

- March 15, 2023

- Updated: July 2, 2025 at 2:49 AM

We’ve been on pins and needles for a few weeks now, but only in the last few days was today’s release really confirmed: GPT-4 is already a reality. The new artificial intelligence model is ready to challenge preconceived notions, not least because it is more powerful than GPT-3.5, the model behind the much-talked-about ChatGPT.

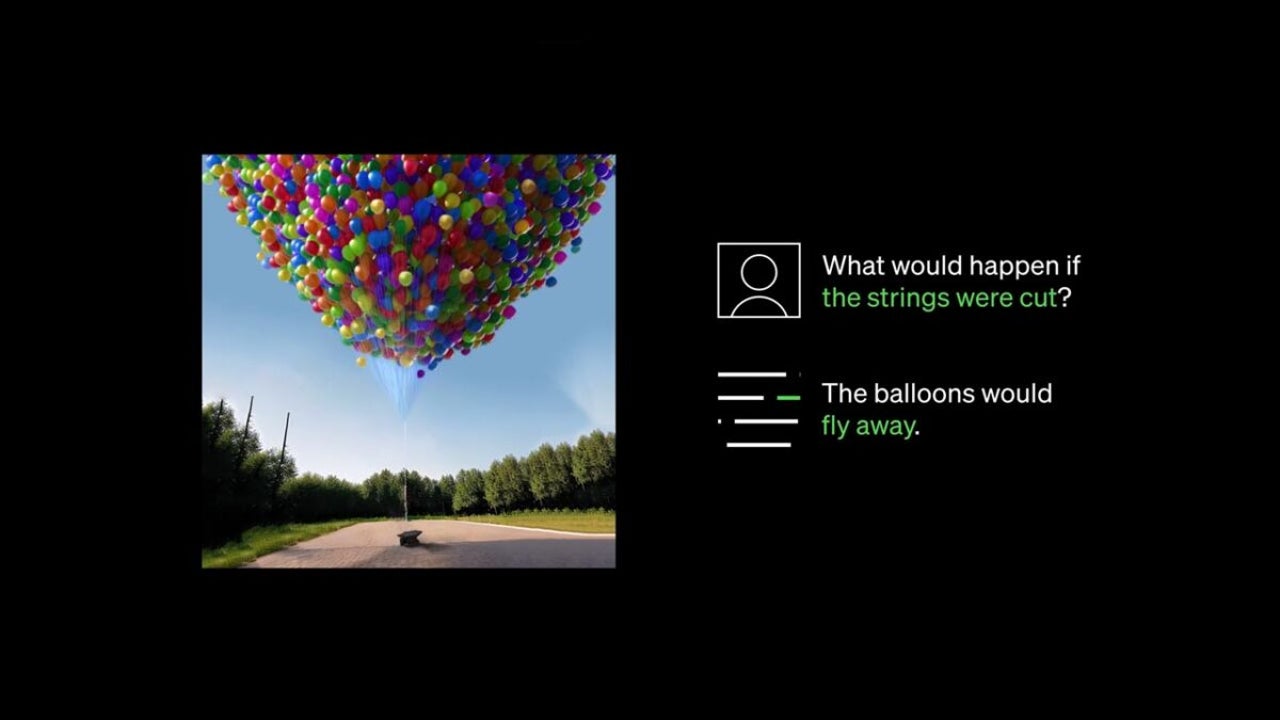

The new model brings many changes, but one stands out above all: it can now accept images as input. What does this mean? In short, the AI can now take in visual content and interpret what we show it. Its answers, however, are still given as text; it does not generate images (that will come in the future, we are sure of it).

“We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning,” the company says in its blog post. “GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.”

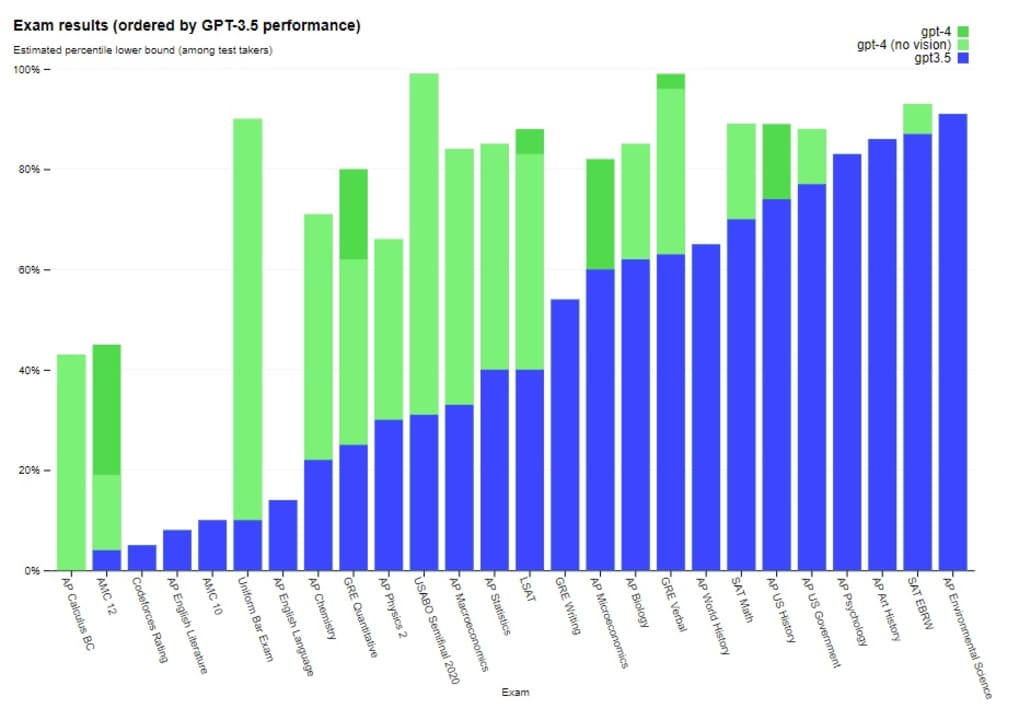

Building on this statement, the company notes on its website that it put the model through several exams originally designed for humans. The key point is that the model was not specially trained or adjusted to pass them. The table above shows the results achieved by GPT-3.5 and GPT-4. The difference is clear: in many subjects the new model not only outperforms the old one, it doubles or even triples its score. Where the old model failed, the new one passes. That nuance is highly relevant and sums up the leap well.

It has not all been smooth sailing, though: according to the main GPT-4 website, the team spent six months making the model safer. “GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations,” they point out.

On this point, OpenAI adds: “We incorporated more human feedback, including feedback submitted by ChatGPT users, to improve GPT-4’s behavior. We also worked with over 50 experts for early feedback in domains including AI safety.”

The infrastructure behind GPT-4 is easy to describe: the model was trained, and runs, on a vast network of supercomputers built on Microsoft’s Azure cloud. OpenAI does acknowledge that obstacles remain: “GPT-4 still has many known limitations that we are working to address, such as social biases, hallucinations, and adversarial prompts. We encourage and facilitate transparency, user education, and wider AI literacy as society adopts these models.”

For now, the free version of ChatGPT will continue to use GPT-3.5, but ChatGPT Plus subscribers can already access the new model. It has also been confirmed that the new Bing, announced about a month and a half ago, runs on GPT-4.

Nacho Requena Molina: journalist specializing in video games and technology, with almost two decades in the field.
