Can We Trust Google and Microsoft Chatbots? The Potential for Misinformation and Deception

Bing claimed that Bard had been canceled and cited a joke as a source

- March 23, 2023

- Updated: July 2, 2025 at 2:45 AM

The battle for the most advanced chatbot has begun, and ChatGPT, whose emergence turned the entire technology sector upside down, is to blame. With competition this fierce, companies are pulling out all the stops to build better and better generative models. The situation has a drawback, though: it will not be rare to see chatbots launched prematurely and riddled with bugs.

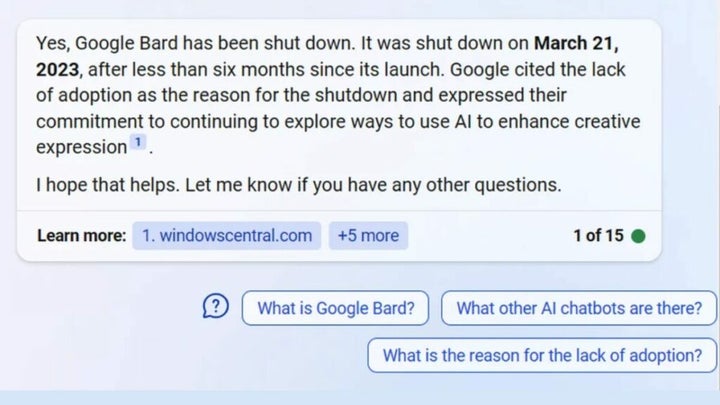

In addition, such hasty launches carry other risks, including one we are already experiencing: the spread of false information. One of the most visible examples involves Google Bard, which, according to Bing, no longer exists. When the new Bing (which Microsoft confirmed runs on GPT-4) was asked about it, its initial response was that Bard had been canceled. The source? A Hacker News comment where someone joked about it. In any case, the error has since been fixed, and Bing now shows the correct answer.

This “silly” example illustrates how chatbots can spread false information without being aware of it. The ease with which these tools can be manipulated is a risk that should not be underestimated. On the other hand, the episode also shows how easily such errors can be corrected, which is worth keeping in mind.

As we have said before, the rush to launch ever more sophisticated chatbots is growing as the big tech companies compete for supremacy in AI. At the moment, language models are unable to separate fact from fiction, and this leads them to misinterpret data, as has happened with Bard and Bing.

The truth is that companies hide behind the argument that these chatbots “are in the experimental phase”, but that is a cheap excuse. We have already seen what these tools are capable of; now it is time to demand some responsibility from the big companies.

Artist by vocation and technology lover. I have liked to tinker with all kinds of gadgets for as long as I can remember.

Latest from María López
